At OpenInfer, we are a team of experts in hardware optimization and enterprise-grade software development, dedicated to redefining AI inference.

With deep expertise in GPU architecture, compiler optimization, and system efficiency, we build high-performance inference solutions that empower developers to run AI models efficiently on any hardware. Our mission is to unlock scalable, cost-effective, and private AI inference for businesses, enabling seamless integration across client and cloud environments.

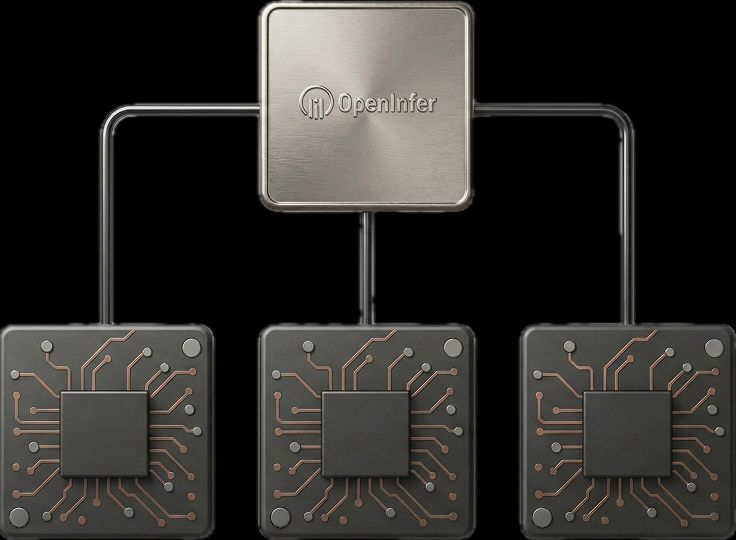

How we are looking at edge inference

An Edge AI Inference Infrastructure

Behnam, Co-Founder & CEO

Dr. Bastani has more than 20 years of experience in AI on constrained compute platforms. He was previously Senior / Director of Engineering for AI & ML at Roblox and Meta, and has shipped AI engines at scale at Meta, Google and Roblox.

Reza, Co-Founder & CTO

Reza Nourai brings more than 20 years of experience in GPU and large-scale memory architectures. He has led major industry breakthroughs at Meta, Microsoft, Roblox and Magic Leap.

Meet our team

Sam

Onkar

Claire

Nikolay

Jennifer

Steven

Chongyi

Varun

Advisors

Manish

Neal

Baris

Ogi

Gokul