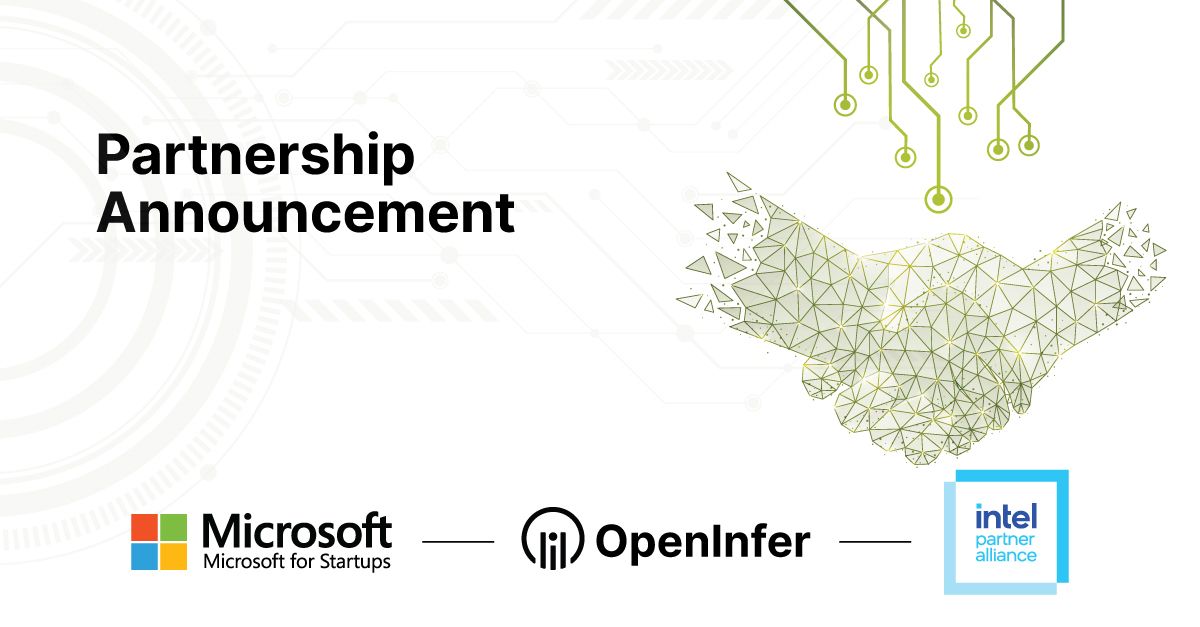

OpenInfer Joins Forces with Intel® and Microsoft to Accelerate the Future of Collaboration in Physical AI

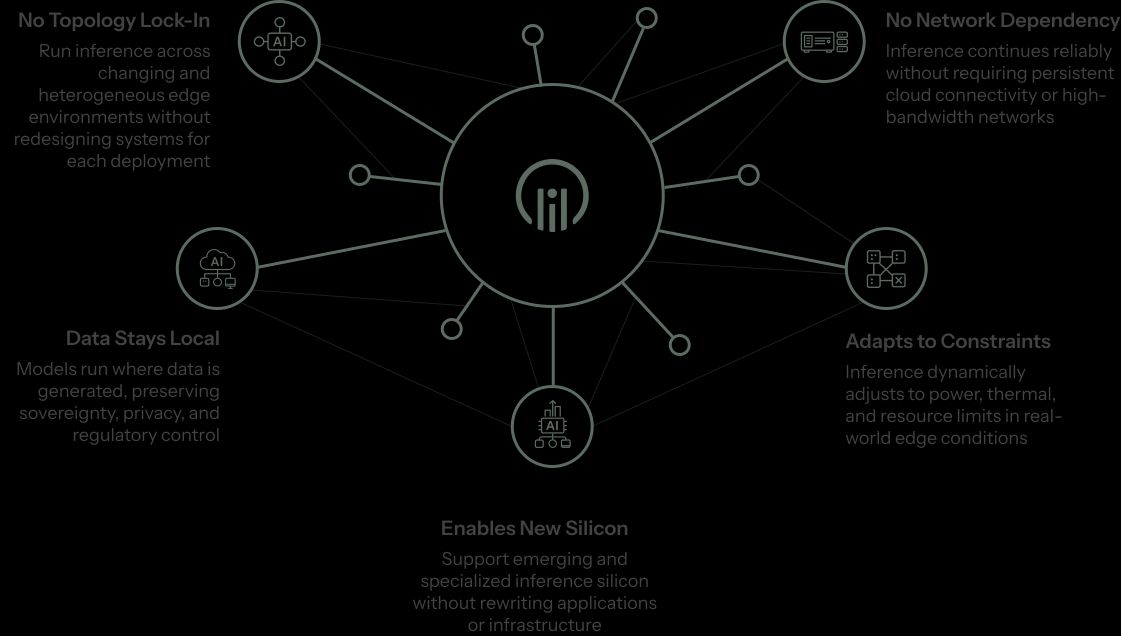

Today, we’re excited to share a big step forward for OpenInfer: we’ve officially joined the Intel® Partner Alliance and Microsoft’s Pegasus Program. These are two of the most influential innovation...